Cool Computing: Strategies for Battery-Efficient On-Device AI in Smartphones

Smartphones already translate speech in real time, enhance photos as you shoot them, and track health signals throughout the day. More of this work now happens directly on the device instead of on remote servers. That shift makes phones faster and more private, but it also puts new strain on batteries and thermal systems. On-device AI only works at scale if it stays within tight power limits.

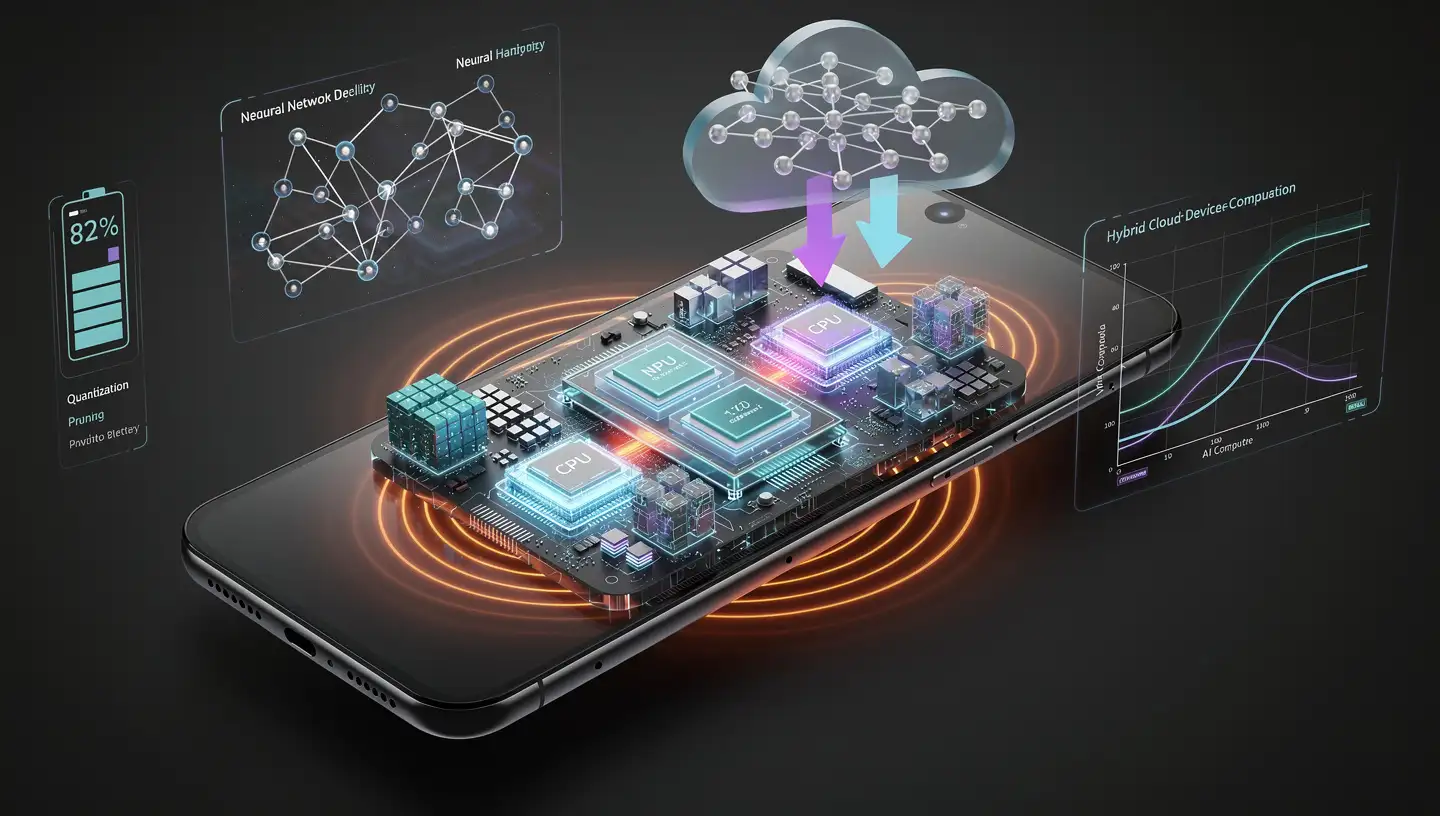

The rise of on-device AI and its energy challenges

On-device AI refers to models that run locally on hardware such as smartphones, wearables, IoT devices, and in-car systems, instead of sending data to the cloud. Adoption is accelerating as manufacturers push for low-latency and low-power computing at the edge.

Processing data locally can cut latency by roughly 80 to 90 percent compared with cloud-based AI. That matters for tasks like voice assistants, camera processing, and accessibility features, where delays are noticeable and network access is unreliable. It also reduces energy spent on wireless transmission.

The trade-off is power draw. Modern AI models are computationally heavy, and smartphones operate within strict limits on battery capacity and heat dissipation. Poorly optimized models drain batteries quickly and trigger thermal throttling, which slows performance and degrades the user experience. As a result, battery optimization and model compression are no longer optional. They are core design constraints.

This pressure is already shaping the market. Real-time inference engines, secure boot pipelines, and energy-aware algorithms are becoming standard, driven by the need to control power consumption and heat on small devices.

Why on-device AI matters beyond phones

The main technical advantage of on-device AI is speed without network dependence. Local processing avoids round trips to the cloud and delivers near-instant responses, with latency reductions of roughly 80 to 90 percent compared with cloud inference. That efficiency explains why the approach is spreading beyond smartphones.

In cars, on-device AI supports advanced driver-assistance systems that must respond in real time, even without connectivity. Local inference improves safety and reduces energy use tied to constant data transmission. In healthcare wearables, on-device models enable continuous monitoring and instant alerts while keeping sensitive data on the device, which both conserves power and limits exposure.

Growth is also driven by improvements in edge computing hardware, local model training, and accuracy evaluation methods tailored for constrained devices. These factors make it possible to deploy AI across industries without assuming unlimited power or bandwidth.

Hardware foundations: SoCs, NPUs, and AI accelerators

Battery-efficient on-device AI depends heavily on hardware design. Modern smartphone system-on-chips combine CPUs, GPUs, memory, and dedicated AI accelerators in a single package. Compared with earlier generations, today’s SoCs offer higher compute density and better thermal control, allowing more complex models to run locally without excessive heat.

A key component is the neural processing unit, or NPU. NPUs are designed specifically for neural network operations and can execute inference faster and with less power than general-purpose CPUs. They are often paired with GPUs to handle workloads such as large language model inference.

Samsung’s Exynos 2200 SoC, for example, includes an NPU rated at up to 26 trillion operations per second (TOPS), and Google’s Tensor G5 is rated at 19 TOPS. On devices such as the Galaxy S22 series, an NPU of this class enables tasks like real-time speech transcription with lower energy use and less heat than CPU-only processing. Higher TOPS can translate into faster inference at lower power, although real-world gains depend on workload characteristics and thermal limits.

Researchers benchmarking large language models on mobile hardware show that factors like cache size and memory bandwidth strongly affect inference speed and energy use. Lightweight models such as Llama2-7B and Mistral-7B can now run offline on mobile platforms, reducing the need for data transmission. Running a 7-billion-parameter model with 4-bit quantization cuts weight storage to roughly a quarter of its FP16 size, which further lowers power consumption and heat output.
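
To make the memory side of that concrete, the arithmetic can be sketched in a few lines. This is a back-of-the-envelope estimate, not a measurement: it counts weights only and ignores activations, the KV cache, and quantization metadata such as scales. The function names are illustrative.

```python
# Rough weight-storage estimate for a model at different precisions.
# Assumption: weights only; activations, KV cache, and per-block
# quantization metadata (scales, zero points) are ignored.

BITS_PER_WEIGHT = {"FP16": 16, "INT8": 8, "4-bit": 4}

def weight_bytes(num_params: int, bits: int) -> int:
    """Bytes needed to store num_params weights at the given bit width."""
    return num_params * bits // 8

def footprint_gb(num_params: int) -> dict:
    """Weight storage in decimal gigabytes for each precision."""
    return {name: weight_bytes(num_params, bits) / 1e9
            for name, bits in BITS_PER_WEIGHT.items()}

if __name__ == "__main__":
    for name, gb in footprint_gb(7_000_000_000).items():
        print(f"{name}: {gb:.1f} GB")
```

For 7 billion parameters this works out to 14 GB at FP16, 7 GB at INT8, and 3.5 GB at 4-bit, which is why aggressive quantization is usually a precondition for running such models on a phone at all.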

Developers rely on profiling tools such as Snapdragon Profiler and Arm Streamline to track CPU and GPU utilization, frequency scaling, and thermal throttling during inference. These measurements inform both hardware selection and software optimization.
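
Even without vendor tooling, a latency trace from repeated inference runs can hint at throttling. The sketch below is a hypothetical post-processing step, not part of Snapdragon Profiler or Arm Streamline: it compares a rolling mean of recent latencies against a baseline taken from the first few runs. The window size and the 1.3× slowdown threshold are assumptions chosen for illustration.

```python
from statistics import mean

def detect_throttling(latencies_ms, window=5, slowdown=1.3):
    """Return the index of the first run at which the rolling mean latency
    exceeds `slowdown` times the baseline, or None if it never does.

    The baseline is the mean of the first `window` runs, which assumes
    the device starts cool.
    """
    if len(latencies_ms) < 2 * window:
        return None  # not enough samples to compare against a baseline
    baseline = mean(latencies_ms[:window])
    for end in range(2 * window, len(latencies_ms) + 1):
        recent = mean(latencies_ms[end - window:end])
        if recent > slowdown * baseline:
            return end - 1
    return None

# Example: stable at 20 ms, then creeping upward as the device heats up.
trace = [20] * 10 + [21, 24, 28, 31, 33, 34, 35]
print(detect_throttling(trace))
```

A sustained rise like this is only a symptom. Confirming that the cause is thermal still requires the frequency and temperature counters that the profilers expose.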

Software techniques that cut power use

Hardware alone is not enough. Software optimization determines how efficiently that hardware is used.

Mixed-precision computing is one of the most effective techniques. By running most operations in half-precision floating point (FP16) instead of full precision (FP32), models use about half the memory for those values and execute faster on hardware with native FP16 support. Frameworks such as TensorFlow Lite support this approach and report speedups of up to three times on compute-heavy models, with little or no loss in accuracy.
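
The precision trade-off can be seen in a small experiment. The sketch below uses Python's standard `struct` module, whose `e` format code is IEEE 754 half precision, to round values to FP16 and then accumulates the products in a wider type, which is the basic idea behind mixed precision. It is an illustration of the numerics only, not how a mobile runtime executes FP16 kernels. The sample values are made up, and the accumulator here is a Python float (64-bit) standing in for the FP32 accumulator typical on real hardware.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def fp16_dot(a, b):
    """Dot product with FP16-rounded inputs and a wider accumulator."""
    return sum(to_fp16(x) * to_fp16(y) for x, y in zip(a, b))

# Illustrative values only.
weights = [0.1234567, -0.7654321, 0.3333333, 0.0009876]
inputs = [1.5, -2.25, 0.75, 3.0]

exact = sum(w * x for w, x in zip(weights, inputs))
mixed = fp16_dot(weights, inputs)

print("bytes per value: FP32 =", struct.calcsize("<f"),
      "FP16 =", struct.calcsize("<e"))
print("relative error:", abs(mixed - exact) / abs(exact))
```

Storage per value halves, while the relative error in this example stays well under one percent. Keeping the accumulation in a wider type is what prevents small rounding errors from compounding across long sums.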

Quantization goes further by reducing numerical precision to formats such as 8-bit or even 4-bit integers. This shrinks model size and accelerates inference while keeping accuracy within acceptable bounds, directly reducing battery drain. In practice, a single model can behave very differently on the same phone depending on how aggressively it is quantized:

| Model setup | Relative memory use (vs FP16) | Relative latency (vs FP16) | Relative power use (vs FP16) |
|---|---|---|---|
| 7B parameters, FP16 | 1.0× | 1.0× | 1.0× |
| 7B parameters, INT8 | ~0.5× | ~0.7× | ~0.7× |
| 7B parameters, 4-bit | ~0.25× | ~0.5× | ~0.5× |

These ratios are indicative rather than device-specific, but they illustrate how lower precision quickly translates into less work per token and lower energy use on mobile NPUs.
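
The mechanics behind quantization are simple enough to sketch. The example below shows symmetric per-tensor INT8 quantization in plain Python: one scale factor maps floating-point weights to integers in the range -127 to 127, and multiplying back recovers an approximation. This is a minimal sketch of one common scheme, not the method used by any particular framework. Production toolchains typically add calibration data and finer-grained scales, and the weight values here are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns the quantized values and the scale needed to map them back.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map quantized integers back to approximate floating-point values."""
    return [v * scale for v in q]

# Illustrative weights only.
weights = [0.82, -0.41, 0.05, -1.27, 0.0, 0.63]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("scale:", scale)
print("max round-trip error:", max_err)
```

The round-trip error for any weight inside the representable range is bounded by half the scale, so a tensor with a few large outliers loses more precision on its small weights. That is one reason finer-grained scales are common in practice.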

Pruning complements quantization by removing redundant weights, cutting computation with little loss in accuracy when applied carefully. Together, pruning and quantization let developers move from "what fits in memory" to "what fits within a realistic battery budget for a typical user session."
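
One simple form of pruning is magnitude-based: weights closest to zero are assumed to matter least and are set to zero. The sketch below shows that idea on a flat list of weights. It is a minimal illustration, assuming unstructured pruning to a target sparsity with no fine-tuning afterwards, and the function name and values are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights.

    `sparsity` is the fraction of weights to remove, between 0 and 1.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

# Illustrative weights only.
weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.03, 0.15, -0.005]
print(magnitude_prune(weights, sparsity=0.5))
```

Zeroed weights save energy only if the runtime or accelerator can skip them, so the practical benefit of unstructured sparsity depends on hardware and kernel support.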

On-device learning adds another layer. Instead of sending data to the cloud for retraining, models adapt locally using user data, which avoids network energy costs and supports personalization in changing environments. The downside is additional local computation, which requires careful power budgeting and thermal control, so effective implementations combine mixed precision and memory-aware design to keep energy use manageable.

Scheduling and NPU offloading

How and when AI workloads run also matters. Intelligent scheduling systems decide whether tasks run on CPUs, GPUs, or NPUs based on power and thermal conditions.
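
A scheduling policy of this kind can be sketched as a small decision function. Everything below is hypothetical: the `DeviceState` fields, the 42 °C and 20 percent thresholds, and the NPU-then-GPU-then-CPU preference order are assumptions for illustration, not how any shipping operating system makes the decision.

```python
from dataclasses import dataclass

@dataclass
class DeviceState:
    soc_temp_c: float     # hypothetical SoC temperature reading
    battery_pct: float    # remaining charge, 0 to 100
    npu_available: bool
    gpu_available: bool

def choose_backend(state, urgent=False, temp_limit_c=42.0, low_battery_pct=20.0):
    """Pick where an inference task should run, or defer it.

    Non-urgent work is deferred when the device is hot or low on charge.
    Otherwise the most specialized available processor is preferred.
    """
    constrained = (state.soc_temp_c >= temp_limit_c
                   or state.battery_pct <= low_battery_pct)
    if constrained and not urgent:
        return "defer"
    if state.npu_available:
        return "npu"
    if state.gpu_available:
        return "gpu"
    return "cpu"

cool = DeviceState(soc_temp_c=35.0, battery_pct=80.0,
                   npu_available=True, gpu_available=True)
hot = DeviceState(soc_temp_c=45.0, battery_pct=80.0,
                  npu_available=True, gpu_available=True)
print(choose_backend(cool))               # background task on a cool device
print(choose_backend(hot))                # same task on a hot device
print(choose_backend(hot, urgent=True))   # user-facing task on a hot device
```

Separating "can this wait" from "where should this run" keeps the policy easy to reason about. Real schedulers also weigh operator support on each processor and the cost of moving data between them.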

Offloading inference to NPUs significantly reduces CPU load and heat. In Android devices such as Google Pixel and Samsung Galaxy phones, routing tasks like image recognition to NPUs extends battery life and smooths performance. Profiling shows that adjusting operating frequencies during inference helps avoid thermal throttling, which would otherwise negate efficiency gains.

Benchmarking studies highlight the role of scheduling in avoiding bottlenecks during large model execution. Some Pixel devices dynamically scale NPU usage based on battery level, reducing power draw as charge drops. This kind of system-level control is increasingly important as models grow larger.

User-centric patterns: offline and hybrid AI

From the user’s perspective, the most effective AI features are those that respect battery limits. Intermittent offline inference is one common pattern. Running models locally through frameworks such as TensorFlow Lite or Core ML provides fast responses and works without connectivity. Samsung Galaxy camera apps use this approach to enhance photos in real time while keeping power use in check.

Hybrid designs combine local and cloud inference. Routine, low-energy tasks run on the device, while heavier workloads are sent to the cloud when necessary. Voice assistants on Galaxy devices use this split to manage temperature during intensive queries while preserving battery life for everyday use. Some systems also pause non-essential AI features when battery levels fall below thresholds such as 20 percent, as seen on iPhones and Pixels.
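
The hybrid split and the low-battery pause can be expressed as a short routing rule. The sketch below is a hypothetical policy, not the logic of any named assistant. The energy estimate in millijoules, the 500 mJ local budget, and the offline fallback are all assumptions. Only the 20 percent threshold comes from the behavior described above.

```python
def route_request(est_cost_mj, battery_pct, online, essential,
                  local_budget_mj=500.0, low_battery_pct=20.0):
    """Decide how to serve one AI request: 'local', 'cloud', or 'paused'.

    est_cost_mj is a hypothetical estimate of the on-device energy cost.
    """
    # Non-essential features pause when charge is low.
    if battery_pct < low_battery_pct and not essential:
        return "paused"
    # Cheap requests stay on the device.
    if est_cost_mj <= local_budget_mj:
        return "local"
    # Heavy requests go to the cloud when a connection exists.
    if online:
        return "cloud"
    # Assumption: offline, a heavy request still runs locally rather than failing.
    return "local"

print(route_request(100, battery_pct=80, online=True, essential=False))
print(route_request(2000, battery_pct=80, online=True, essential=False))
print(route_request(100, battery_pct=15, online=True, essential=False))
```

Whether an offline heavy request should run locally, run on a smaller model, or be declined is a product decision. The fallback here is just one option.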

Educational and navigation apps use similar hybrids. Local models handle quick predictions and offline access, while cloud resources step in for larger updates. Mixed precision and quantization reduce latency and energy use in both cases.

Thermal limits and what comes next

Despite steady progress, heat remains a limiting factor. Sustained AI workloads can still trigger throttling, reducing performance and efficiency. On-device learning increases this risk if power budgets are not tightly managed. Interactions between operating systems and hardware further complicate thermal control, leading to inconsistent behavior across devices.

Future work focuses on more efficient real-time inference engines, better edge training methods, and tighter integration between model design and hardware evaluation. The goal is to maintain accuracy without pushing devices past thermal limits.

Conclusion

On-device AI is reshaping smartphones, but only within the boundaries set by batteries and heat. Dedicated accelerators, compressed models, mixed precision, and careful scheduling make local inference practical at scale. Hybrid designs and offline patterns keep power use predictable for users.

As hardware and software co-evolve, these strategies will determine whether AI features remain useful throughout a full day on a single charge. Sustainable on-device AI is less about ambition and more about discipline, measured in milliwatts and degrees Celsius.